June 12

Registration/Check-in

7:30 AM - 7:45 AMContinental Breakfast

7:45 AM - 8:15 AMOpening Remarks

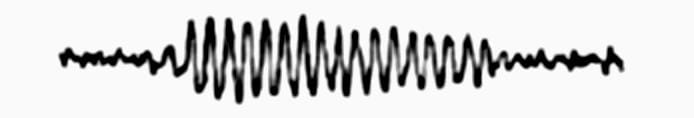

8:15 AM - 8:30 AMValidating speech-related FFR in a novel paradigm using simultaneous M/EEG recording

Tzu-Han Zoe Cheng 8:30 AM - 9:00 AMUsing Volumetric Mesoscopic Electrophysiology to Determine the Neural Origins of Envelope Following Responses in the Non-Human Primate

Tobias Teichert 9:00 AM - 9:30 AMCoffee Break, vendors + posters

10:00 AM - 10:30 AMMultichannel Methods for Rapid and High Quality Frequency Following Responses

Nike Gnanateja 11:30 AM - 12:00 PMMaturation of the frequency-following response (FFR) during early infancy: a longitudinal study

Alejandro Mondéjar-Segovia 12:00 PM - 12:30 PMLunch, vendors + posters

12:30 PM - 1:30 PMNeonatal Speech Sound Encoding: Exploring the influence of prenatal corpus callosum development through neurosonography and EEG

Natàlia Gorina-Careta 2:30 PM - 3:00 PMCoffee Break, vendors + posters

3:00 PM - 3:30 PMPhysiological mechanisms contributing to atypical pragmatics in autism: A study of neural speech perception and speech-motor articulation

Janna Guilfoyle 3:30 PM - 4:00 PMWhat does the FFR reveal about speech processing in autism? New insights from a prospective longitudinal study of infants at elevated familial likelihood

Rachel Reetzke 4:00 PM - 5:00 PMWelcome Reception

5:00 PM - 7:00 PMJune 13

Registration/Check-in
7:30 AM - 7:45 AM

Continental Breakfast
7:45 AM - 8:30 AM

Sensing echoes in the auditory brainstem of rodents to detect learning-induced neuroplasticity
Aysegul Gungor Aydin
8:30 AM - 9:00 AM

Cochlear Microstructure and Frequency following responses in individuals with musical training
Rucha Vivek
9:00 AM - 9:30 AM

Short- and long-term neuroplasticity interact during the perceptual learning of concurrent speech
Jessica MacLean
9:30 AM - 10:00 AM

Coffee Break, vendors + posters
10:00 AM - 10:30 AM

What we can learn about auditory circuits from responses that follow stimulus frequency - and those that do not
Srivatsun Sadagopan
10:30 AM - 11:30 AM

Phonetic categories in speech emerge subcortically: Converging evidence from the frequency-following response (FFR)
Gavin Bidelman
11:30 AM - 12:00 PM

Duplex perception reveals brainstem auditory representations are modulated by listeners' ongoing percept for speech
Rose Rizzi
12:00 PM - 12:30 PM

Lunch, vendors + posters
12:30 PM - 1:30 PM

The Contribution of Brainstem Auditory Centers to Speech Processing Deficits in Early Psychosis: A Frequency-Following Response Study
Fran López-Caballero
2:30 PM - 3:00 PM

Coffee Break, vendors + posters
3:00 PM - 3:30 PM

Roundtable Discussion
5:30 PM - 6:15 PM

Posters
6:15 PM - 7:00 PM

June 14

Registration/Check-in
7:30 AM - 8:00 AM

Hands-On Session on FFR data collection and analyses
8:00 AM - 10:45 AM

Walk to lunch
10:45 AM - 11:00 AM

Networking Lunch and Workshop Conclusion
11:00 AM - 1:00 PM

Featured Speakers

Dr. Samira Anderson

University of Maryland

Clinical Applications of the FFR for Older Listeners

For decades, audiologists have relied on the audiogram as the gold standard for hearing loss diagnosis. The audiogram is most effective for differentiating between sensorineural and conductive hearing loss, but it falls short in providing the information needed to predict an individual’s ability to understand speech in complex listening environments. Growing awareness of pathologies or deficits beyond the cochlea has led to efforts to incorporate new clinical measures that are sensitive to these deficits. The frequency-following response (FFR) provides a measure of the auditory system’s temporal precision and can therefore be used to evaluate neural speech processing. This presentation will discuss clinical applications of the FFR in the older listener with respect to identifying sources of listening difficulties, assessing hearing aid benefits, and monitoring outcomes of intervention.

Dr. Fuh-Cherng Jeng

Ohio University

Machine Learning and Frequency Following Responses: A Tutorial

The human frequency-following response (FFR) offers a valuable lens into the intricacies of auditory stimulus processing within the brain. This presentation serves as a guide, extending from basic principles to practical implementations of machine learning techniques applicable to FFR analysis. Delving into various supervised models such as linear regression, logistic regression, k-nearest neighbors, and support vector machines, alongside an exploration of the unsupervised realm with k-means clustering, this presentation will attempt to illuminate their applications and discuss the nuances of their utilization.

Beyond these, we will navigate through an array of machine learning tools, encompassing Markov chains, dimensionality reduction, principal components analysis, non-negative matrix factorization, and neural networks. The talk emphasizes a nuanced understanding of each model’s applicability, pros, and cons, recognizing the pivotal role of factors like research questions, FFR recordings, target variables, and extracted features.

To enhance comprehension, a Python-based example project will be presented, showcasing the practical application of several discussed models. Drawing insights from a sample dataset featuring six FFR features and a target response label, this tutorial equips researchers with the knowledge needed to judiciously choose and apply machine learning methodologies in unraveling the mysteries embedded in human auditory processing.

Dr. Rachel Reetzke

Kennedy Krieger Institute

The Johns Hopkins University School of Medicine

What does the FFR reveal about speech processing in autism? New insights from a prospective longitudinal study of infants at elevated familial likelihood

Autism spectrum disorder (ASD) is associated with atypical speech processing early in life, which can have negative cascading effects on language development, one of the strongest predictors of long-term outcomes. While the frequency-following response (FFR)—a sound-evoked potential that reflects synchronous neural activity along the auditory pathway—has revealed speech processing differences in children and adolescents on the autism spectrum, what has yet to be established is the extent to which such differences are present during the ASD prodromal period. Investigating speech-evoked FFRs in infant siblings of children with ASD (infants at elevated familial likelihood for autism [EL infants]) has the potential to reveal the earliest time window when neural function related to speech processing may begin to diverge. In this talk, I will present results from a prospective, longitudinal study characterizing the developmental time course and the predictive value of the FFR to speech sounds in EL infants compared to infants with a typical likelihood for developing ASD. Findings reveal that the FFR may be sensitive to subtle speech processing differences in EL infants within the first year of life, well before the emergence of behavioral precursors. This study will set the stage for a broader discussion of the utility of the FFR for characterizing speech processing differences and in predicting distal language and social communication outcomes across the range of heterogeneity associated with the autism phenotype.

Dr. Srivatsun Sadagopan

University of Pittsburgh

What we can learn about auditory circuits from responses that follow stimulus frequency – and those that do not

Frequency-following responses (FFRs) to speech stimuli are non-invasive and easily deployable measurements of speech encoding integrity in the auditory system that show great potential as a biomarker for many pathological conditions. But because fundamental questions remain with respect to how the activity and modulation of underlying neural circuits affect speech FFRs, interpreting and attributing changes in FFRs to particular circuit elements is challenging. As a first step towards gaining more biological insight into these issues, our lab has been involved in a collaborative effort to determine the extent to which speech FFRs and their response properties are conserved in an animal model, setting the stage for future mechanistic experiments. In this talk, I will present results from the guinea pig, a rodent with excellent low-frequency hearing, that demonstrate that FFRs to speech sounds show remarkable similarities with those recorded in humans and monkeys. I will discuss how stimulus fundamental frequency, stimulus statistics, arousal state, and manipulation of auditory cortical activity affect FFR amplitudes and fidelity in this species. Using intracranial translaminar recordings, I will discuss our initial efforts to map scalp recordings to neural ensemble activity. Finally, I will present early studies on non-stimulus-following intrinsic oscillatory activity in the auditory cortex, and discuss what these may reveal about underlying circuits.